Our world is more connected than ever. It is now common for people to have smart devices that create and collect data throughout their home or office. These devices often include voice assistants that perform basic tasks when prompted with a verbal command. Voice assistants can set reminders, play music, or provide news and weather reports. They can also order products and read texts or emails. However, all tasks require the devices to listen.

So, is it safe to use voice assistants if they are always listening?

Despite living in a digital world, many people still prefer to protect their personal and private data. That concern causes some to forgo a voice assistant for safety reasons.

Yet, 76% of respondents in our survey stated they use these devices.

We asked over one thousand Americans to gauge their overall interest and knowledge of increasingly popular voice assistants.

Key Findings:

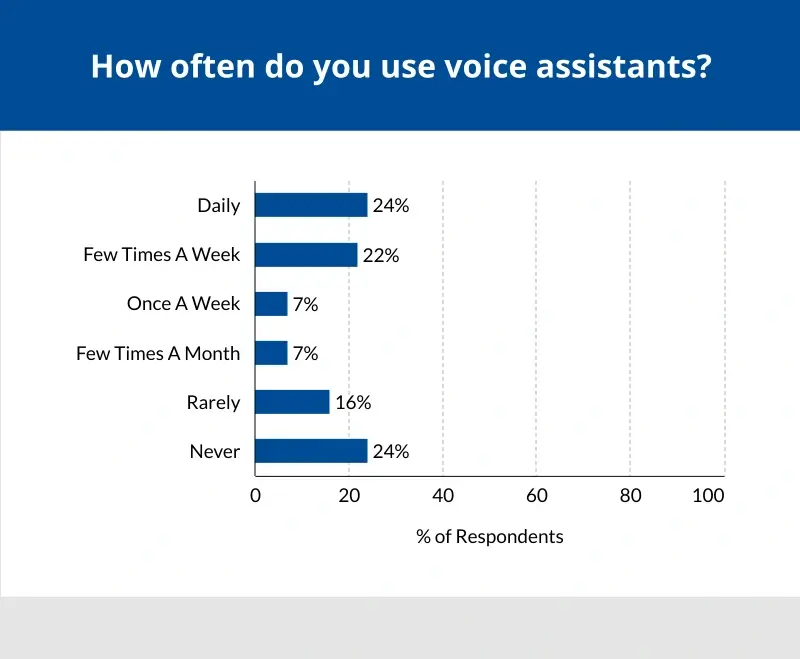

- 24% of people said they use a voice assistant daily. Another 22% use one a few times per week. Conversely, 16% said they rarely ask the devices anything, and 24% have never used a voice assistant.

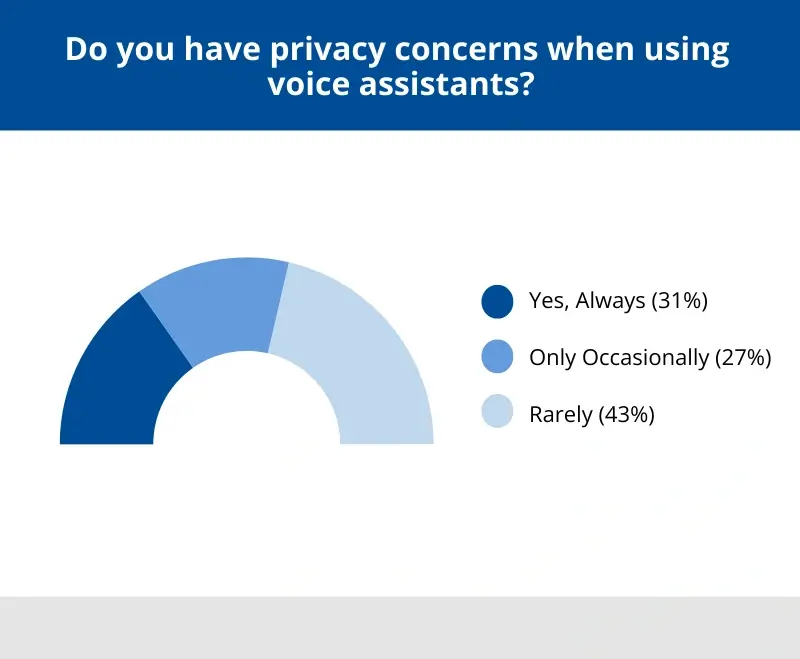

- 31% of respondents expressed privacy concerns when using voice assistants. In comparison, 27% of people surveyed reported occasional concerns. 43% disclosed they never have privacy concerns.

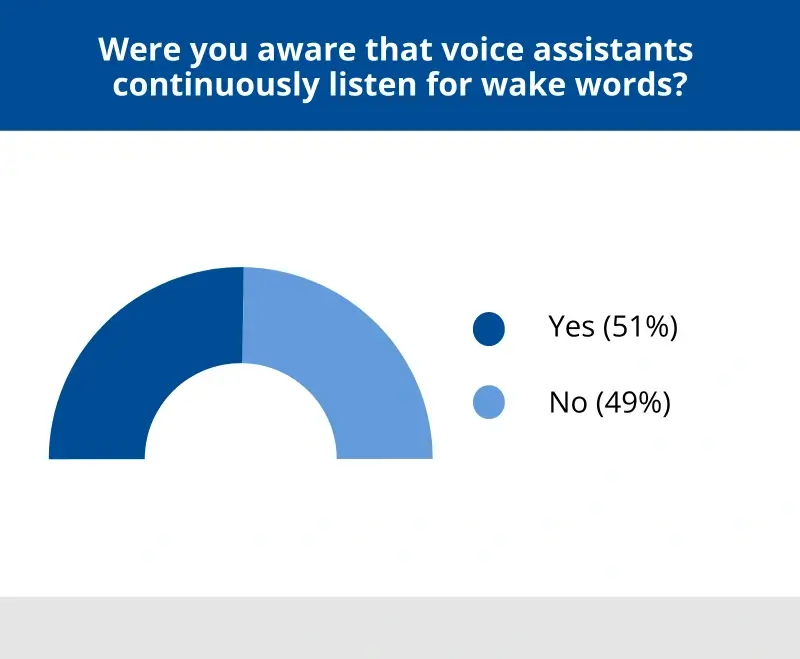

- 49% of participants revealed they did not know that voice assistants continuously listen for wake words.

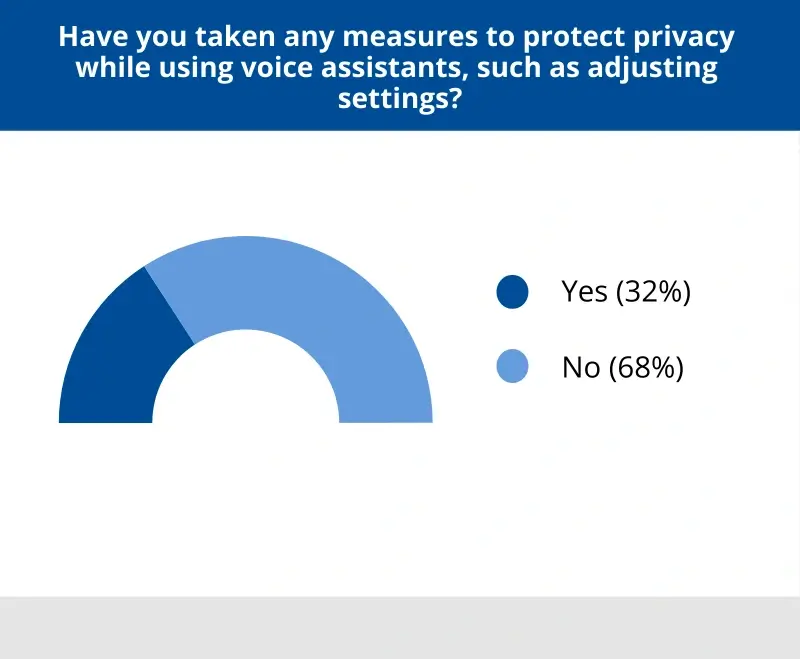

- 68% of respondents stated they have never taken any measures to improve the privacy of voice assistants.

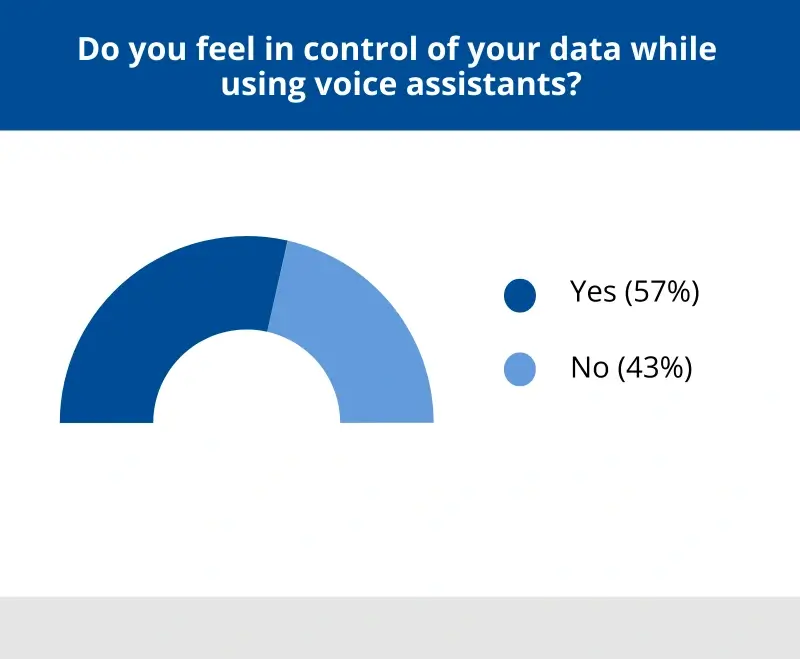

- Despite that, 57% of those surveyed indicated they feel in control of their data when they use voice assistants.

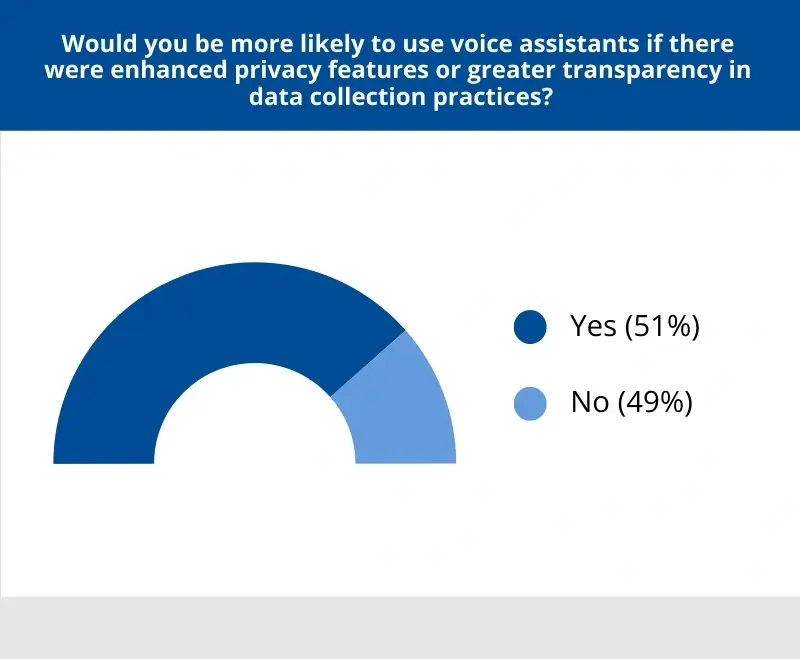

- 77% of those polled claimed they would be more likely to use voice assistants with enhanced privacy features and greater transparency.

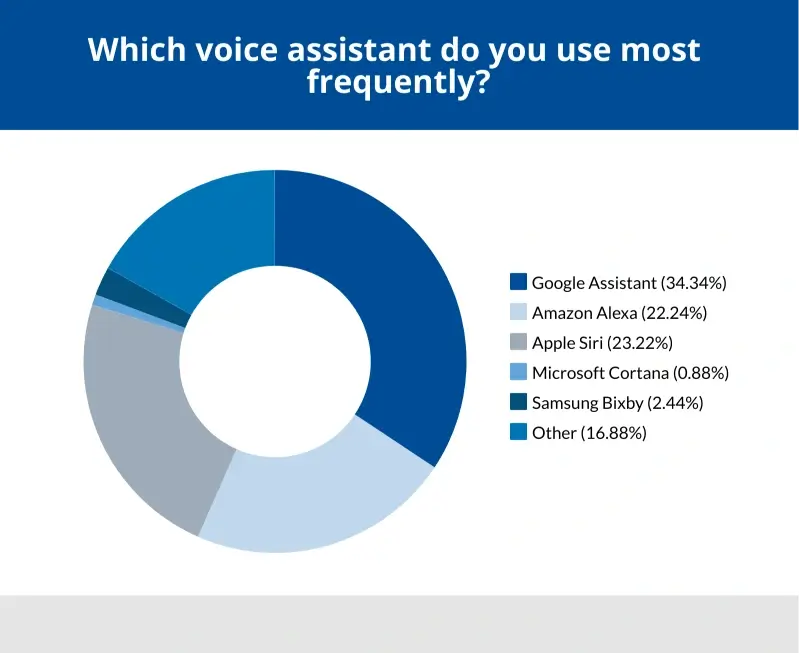

- Google Assistant was the most popular at 34% of respondents. 23% favored Siri and 22% expressed a preference for Alexa.

Our findings suggest the public does not realize the scope of personal data that voice assistants can collect and share.

In this article, we explain how these smart devices actually work, including Siri, Google Assistant, and Alexa. Plus, we offer tips to avoid excessive collection if you opt for a virtual assistant in your home or workplace.

How Voice Assistants Work

Voice assistants run on speech recognition, natural language processing (NLP), machine learning, and cloud computing technologies.

Here is a simple answer for how voice assistants work:

The voice assistant passively listens to ambient sounds for a wake word. This word or phrase alerts the device that the user wants to give a command. When the virtual assistant hears the wake word, it engages. After recording the user’s request, the device converts the speech to text or transmits the raw audio to the cloud. At this point, algorithms in the cloud process the text to determine the user’s intent. Those algorithms retrieve the relevant data, generate a text-to-speech response, and relay that to the device. Once the voice assistant receives the data, it responds to the user and then returns to standby mode.

Each step is complex and requires companies to manage massive amounts of data.

Let’s take a deeper look at the listening process and company policies to better understand the privacy concerns of assistants.

Listening Process

As explained, the voice assistant is a careful listener. During the passive listening process, the device analyzes all background noise, searching for sounds that match its assigned wake word. Nearly half of those surveyed did not realize that voice assistants are always listening.

Since the assistant spends most of its time in this state, it is designed to conserve power and storage. Therefore, it does not record or transmit audio while passively listening.

When the assistant detects the wake word, it activates its microphone and starts to record the user’s speech. Often, the device signals the switch to active listening with a visual cue, such as animation or lighting. The exact cue will depend on the model being used.

After the virtual assistant records and sends the command to the cloud, it waits for the response. In the span of seconds, the cloud creates the output and delivers it to the device. The assistant fulfills the user’s request and transitions back to passive listening.
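The cycle described above can be pictured as a small state machine. The sketch below is a toy model only: the class, the state names, and the "hey assistant" wake phrase are invented for illustration, text strings stand in for audio, and no vendor's actual implementation is implied.

```python
from enum import Enum, auto


class State(Enum):
    PASSIVE = auto()   # listening only for the wake word; nothing is kept
    ACTIVE = auto()    # recording the user's request
    WAITING = auto()   # request sent; waiting for the cloud response


class Assistant:
    """Toy model of the listening cycle (hypothetical names throughout)."""

    def __init__(self, wake_word="hey assistant"):
        self.wake_word = wake_word
        self.state = State.PASSIVE
        self.recorded = []  # stands in for captured audio

    def hear(self, phrase):
        if self.state is State.PASSIVE:
            # Passive mode: compare against the wake word, then discard.
            if phrase.lower() == self.wake_word:
                self.state = State.ACTIVE
        elif self.state is State.ACTIVE:
            # Active mode: the only point where speech is kept.
            self.recorded.append(phrase)
            self.state = State.WAITING

    def receive_response(self, response):
        # After replying, the assistant drops back to passive listening.
        if self.state is not State.WAITING:
            raise RuntimeError("no request is pending")
        self.state = State.PASSIVE
        return response
```

In this model, background chatter heard in the passive state never reaches `recorded`. Only the phrase that follows the wake word is stored.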

The listening process of a voice assistant reflects the pursuit of a seamless user experience.

Let’s outline the data collection practices that occur during these interactions.

Data Collection Practices

Voice data is a clear issue for most people when it comes to a virtual assistant. In our survey, almost 60% of respondents were at least occasionally concerned about privacy when using voice assistants.

For starters, voice data consists of the user’s recorded commands or queries. Companies mainly use voice data to process requests with NLP algorithms and then perform the task. However, it also serves a purpose in the machine learning aspect of assistants.

Machine learning algorithms create random, anonymized sets of voice data over time. The technique allows the smart device to review and refine its process to better recognize patterns and understand language. This diverse data also helps train new models in the future. The feedback loop continues, enabling each tech giant to provide more responsive products.

As a result, users worry that assistants record them and send the audio to an offsite server or third party. For a good reason. You seemingly lose control of that data. And the device collects more than just voice data.

The following list explains the other data that voice assistants might collect and how companies use it:

- Device Data: Notes the hardware and software of the device. It also includes metadata like timestamps for the date, time, and duration of an interaction.

- Geolocation Data: Informs algorithms in the cloud to provide a response that considers the user’s current location.

- Contextual Data: Gives extra details on usage and preference. This data tracks patterns and context within a session with follow-up questions. Companies can also use it to adapt to an accent or tailor answers to a person.

- Performance Data: Allows product teams to assess response time and relevance to enhance the device.

- Diagnostic Data: Sends data from the device when an error occurs. Techs use the report to understand the problem and resolve the issue.

Companies collect this data to create a full picture of how the device works and what can still be improved.
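To make these categories concrete, here is a rough sketch of how a single interaction might be represented as one record. Every field name is hypothetical and chosen only to mirror the list above; real products define their own schemas.

```python
from dataclasses import dataclass, asdict
from datetime import datetime, timezone
from typing import Optional


@dataclass
class InteractionRecord:
    # Device data: hardware, software, and interaction metadata
    device_model: str
    software_version: str
    started_at: datetime
    duration_seconds: float
    # Geolocation data: optional, used to localize the response
    latitude: Optional[float] = None
    longitude: Optional[float] = None
    # Contextual data: for example, whether this was a follow-up question
    is_follow_up: bool = False
    # Performance data: how long the response took
    response_ms: Optional[int] = None
    # Diagnostic data: filled in only when an error occurs
    error_code: Optional[str] = None


def minimized(record: InteractionRecord) -> dict:
    """Return only the fields that are set, dropping empty optional ones."""
    return {k: v for k, v in asdict(record).items() if v is not None}
```

A record created without location or error details simply omits those keys when passed through `minimized`, which is one simple way to picture collecting less data.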

But, even beyond data collection, you should know your device’s storage and retention policy.

Security Measures and Data Retention Policies

Security measures and retention policies differ among companies that offer voice assistants. These terms describe the policies for sending and storing data from the device. 57% of participants in our survey relayed that they felt in control of their data while using voice assistants.

Here is a broad outline of how companies might handle that data:

Before sending the command, the device contacts the cloud and completes a handshake. This process determines how data will travel across networks. Some of the networks are public. To safeguard you, most companies encrypt data in transit to prevent unauthorized access.

Within seconds, the data arrives in the cloud. The server decrypts the command, fulfills the request, and then encrypts the response for the device. Therefore, your data is secure on each leg of its journey.
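The article does not name a specific protocol, but TLS is the usual way to encrypt data in transit. Assuming TLS, a client could be set up along these lines with Python's standard `ssl` module. The function name and the minimum-version policy are illustrative choices, not any vendor's configuration.

```python
import ssl


def make_client_context() -> ssl.SSLContext:
    """Build a TLS client context that verifies the server before sending data."""
    # create_default_context() enables certificate and hostname verification.
    context = ssl.create_default_context()
    # Refuse older protocol versions (an illustrative policy choice).
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context
```

The certificate check happens during the handshake, so the command leaves the device only after the server has proved its identity.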

But what about data at rest?

Many companies also encrypt data that stays on their servers for prolonged periods. This storage includes anonymized voice data used to improve the smart device or train new models. Encrypting stored data adds another layer of protection.

Customers do not want their recordings stored forever, though. To ease those concerns, many companies limit what data they can retain and how long they can keep it. These limits balance the need for useful data to improve the voice assistant without infringing on your privacy rights.
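A retention limit can be as simple as discarding anything older than a fixed window. The sketch below assumes recordings are tracked as `(recording_id, created_at)` pairs. The function name and the day-based window are hypothetical.

```python
from datetime import datetime, timedelta, timezone


def purge_expired(recordings, retention_days, now=None):
    """Keep only recordings newer than the retention window.

    `recordings` is a list of (recording_id, created_at) tuples.
    """
    now = now or datetime.now(timezone.utc)
    cutoff = now - timedelta(days=retention_days)
    return [(rid, created) for rid, created in recordings if created >= cutoff]
```

With a 90-day window, a recording made yesterday survives while one made six months ago is dropped.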

In addition, smart devices often feature user controls for those who are conscious of their privacy. User controls provide more freedom to manage your data. The options range from deleting recordings stored in the cloud to even restricting what data companies collect.

Still, despite encryption and user control settings, there are plenty of skeptics of voice assistants.

Privacy Concerns

People are skeptical of smart devices for three main reasons.

First and foremost, some people just do not trust Big Tech. Especially with a device that can begin recording at any time. These doubts present themselves in several ways, but the bottom line is they suspect companies are not completely truthful.

Those concerns may not be baseless.

In 2023, Amazon agreed to pay a $25 million penalty after the Federal Trade Commission (FTC) alleged they violated a child privacy law. The FTC argued Amazon deceived users and kept years of data obtained by their Alexa voice assistant despite deletion requests. As part of the action, the FTC ordered Amazon to overhaul how it handles voice and location data.

Others worry about the accidental activation of a smart device. Sometimes, the device detects a similar sound to its wake word and starts to record. These activations can result in longer audio clips than a normal interaction.

In 2019, a contractor for a firm that grades Apple devices claimed accidental Siri activations can capture private moments. Apple stated that less than 1% of Siri activations are graded and all reviews adhere to their strict privacy standards. A week later, Apple pledged to reform their grading system.

Yet Apple was not alone. In 2021, a federal judge ruled Alphabet Inc. must face a class-action lawsuit related to their voice assistant. The lawsuit claims Google collected data from accidental activations and exposed it to advertisers. The judge certified the class in December 2023.

Lastly, some people are concerned about remote activation. Certain smart devices allow remote control through a companion app. If configured, users can do anything from adjusting the thermostat to turning off the lights after leaving home. Though rare, bad actors can exploit settings to bypass measures and gain access, as seen through Microsoft’s Cortana in 2018.

Each of these concerns demands attention to secure your sensitive data. Yet, 68% of participants report they have never taken measures to protect their privacy.

That raises the question: By default, how much does your smart device actually know?

Assessment of Popular Voice Assistants

Knowledge is often power when it comes to smart devices and protecting private data.

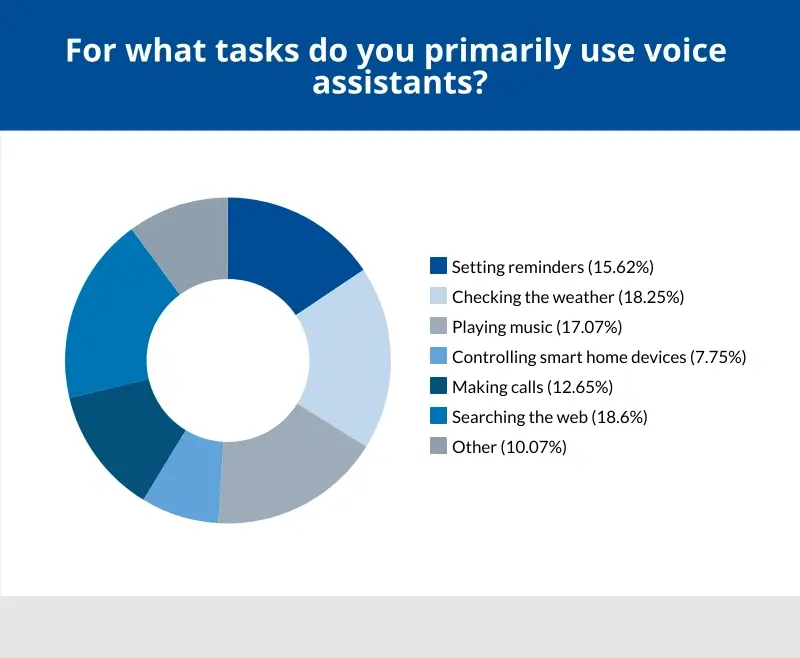

The charts above show which voice assistants people choose most often and how they use them.

Every company asserts that nothing is recorded or transmitted while your voice assistant is in standby mode. As far as we know, that is true.

However, your data exists beyond each of these interactions. That fact escapes many people who do not have the time to read through lengthy legal agreements.

The following table offers an overview of how popular voice assistants collect and use data:

Data Collection and Usage Practices of Smart Devices

| Assistant | Sell Data | Track Data | Secure Data | Control Data |
|---|---|---|---|---|
| Siri | No | No | Yes | Yes |
| Google Assistant | No | Yes | Yes | Yes |
| Alexa | Yes | Yes | Unclear | Unclear |
| Bixby | Unclear | Yes | Unclear | Unclear |
| Cortana | Unclear | Yes | Yes | Unclear |

Control data is a measure of whether users can delete their data or request information on its usage.

Secure data is a measure of the product’s safety features, including encryption standards and disclosure policies for data breaches.

Sell data is a measure of whether the product shares collected data with third-party brokers or advertising partners.

Track data is a measure of whether the product uses collected data to build a profile for targeted ads.

Unclear indicates the company’s policy did not plainly express privacy rights, usage practices, or security features.

Below, we summarize the important policies of each voice assistant so you can make the best decision for yourself.

What We Know About Siri

General Information

Popular Devices with Siri:

- iPhones

- iPads

- Macs

- Apple Watches

- AirPods

- HomePod

Default Wake Words: “Siri” or “Hey Siri”.

Privacy Policy (July 2024)

Apple is known for its transparency and commitment to privacy. In many regards, the company sets the standard and continues to improve Siri’s privacy protections. Apple limits the data it collects when you use Siri. By default, the voice assistant collects as little data as possible to fulfill the request. This approach has even caused some critics to claim Siri lacks context compared to other assistants.

Also, Apple’s ecosystem of smart devices now has default opt-outs for human grading. Users must opt in to share voice data with Apple for quality purposes.

In addition, Apple ties data collected from Siri to a random identifier, a long string of letters and digits, rather than to your Apple ID or phone number. As a result, Apple insulates users from third-party data brokers and advertisers.
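The idea behind a random identifier can be sketched in a few lines. This is a generic illustration, not Apple's implementation: the class and method names are invented, and `uuid.uuid4()` is used only as a convenient source of a random-looking string.

```python
import uuid


class RequestTagger:
    """Tags requests with a random ID that is not derived from the account."""

    def __init__(self):
        self.random_id = uuid.uuid4().hex  # a 32-character hex string

    def tag(self, request_text):
        # The account ID is deliberately absent from the outgoing payload.
        return {"id": self.random_id, "request": request_text}

    def reset(self):
        # Issuing a fresh ID breaks the link to earlier requests.
        self.random_id = uuid.uuid4().hex
```

Because the payload carries only the random ID, a server that receives it can group requests from one device without learning whose device it is.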

Apple changed how requests are processed. If able, Siri processes the command on the device itself. That method means many voice inputs are never sent to an Apple server, removing the potential for mishandling. Siri still sends voice data to servers for more complex commands. You can see how the assistant processed each request in Siri Settings. Apple stores a text transcript of interactions in the cloud in all cases.

Users must choose to set up Siri on an Apple Watch to interact with the voice assistant. The Raise to Speak option on Apple Watch Series 3 (and later) enables Siri with a motion instead of wake words. This setting can lead to more accidental activations.

You can read more about Siri, dictation, and privacy from Apple.

Security Features

Apple utilizes end-to-end encryption when transmitting requests to prevent unauthorized access to data. They store some Siri data on iCloud servers. While Apple encrypts data at rest on these servers, they possess the decryption keys.

You can also customize Siri to your preference for greater security. For example, users can disable the Allow Siri When Locked setting on their device. This feature blocks the voice assistant from processing sensitive data without using Face ID or entering a passcode first.

User Controls

Apple gives users complete control over their data. You can delete Siri data or turn off the voice assistant anytime. There is no hassle.

To delete data linked to Siri on an iPhone:

- Open Settings.

- Tap Siri & Search.

- Tap Siri & Dictation History.

- Select Delete Siri & Dictation History.

To turn off Siri on an iPhone:

- Open Settings.

- Tap Siri & Search.

- Disable Press Side Button for Siri and Allow Siri When Locked.

- Tap Listen for.

- Select Off.

What We Know About Google Assistant

General Information

Popular Devices with Google Assistant:

- Pixel smartphones, tablets, watches, and earbuds

- Nest Hub, Audio, cameras, doorbells, locks, and thermostats

- Chromecast with Google TV

Default Wake Words: “Hey Google”.

Privacy Policy (July 2024)

Google clearly states that it collects relevant data to improve its products and services. Nonetheless, they stress their commitment to protect your privacy. To that end, Google insists they will never share data with third-party advertisers. Similarly, they do not analyze or save voice recordings unless users opt into human reviews. Even if you agree to grading, you can choose how long Google stores those recordings.

However, Google links your voice assistant to your account. That means Google Assistant can access personal data like full name, address, billing info, and other private details. In addition, Google does not use random identifiers. All data collected by the voice assistant is associated with your Google Account. The connection makes tracking and profiling users for personalized ads much easier.

Google serves these results through its own ad platform. This integrated approach supports Google’s claims that they do not disclose confidential data to third-party marketers. Targeted ads appear across Google’s network, including Search, Gmail, and YouTube.

Google may also share data with specific partners. For example, if you call a Google Partner from an ad, Google may share your phone number with the service.

While Google does work with third-party data processors, they state that privacy principles bind these companies’ conduct.

For its part, Google acknowledges the importance of user privacy and pledges to update and improve its policy regularly.

You can learn more about Google’s privacy policy here.

Security Features

Data collected and transmitted by Google Assistant has layers of protection. Google encrypts all data that moves between your smart device and its services. This encryption prevents data from being intercepted as it leaves your home and arrives in the cloud for processing or storage. Google also encrypts data at rest by default, but the company manages the decryption keys itself.

These safety features help keep your data secure against cyber threats.

User Controls

Google has made strides with choice and transparency. They allow users to customize their devices to match their comfort and needs. You can adjust how Google Assistant works with your data at any time. Some controls include limits on personal data collection, web activity, and voice settings.

To delete all Google Assistant activity:

- Sign in to your Google Account.

- Visit your Google Assistant’s Activity page.

- Select More.

- Select Delete under interests and notifications.

- Select Delete to confirm.

Note: It may take a day before the deletion syncs with other devices.

To delete recent Google Assistant activity, say any of the following phrases:

- “Hey Google, that wasn’t for you.”

- “Hey Google, delete my last conversation.”

- “Hey Google, delete today’s activity.”

- “Hey Google, delete this week’s activity.”

To modify the Web & App Activity settings of your account:

- Sign in to your Google Account on your computer.

- Click your profile icon in the top-right corner of the browser.

- Click Manage your Google Account.

- Select Privacy & personalization.

- Select Web & App Activity under History settings.

- Click the Google Assistant icon in the scrolling menu.

- Click Saving to Web & App Activity.

- Select Turn off from the drop-down menu.

To stop Google from linking audio recordings to your account:

- Launch the Google Home app.

- Tap your profile icon.

- Select My Activity.

- Select Saving Activity.

- Toggle Include Audio Recordings off.

To adjust wake word sensitivity and prevent accidental activations:

- Launch the Google Home app.

- Select the desired device.

- Tap Device Settings.

- Select “Hey Google” sensitivity.

- Adjust the settings from Least sensitive to Most sensitive.
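One way to picture what a sensitivity setting does is as a threshold on how closely a sound must match the wake word. The sketch below is purely illustrative: the function name, the 0 to 1 scales, and the threshold formula are assumptions, not Google's actual detection logic.

```python
def should_wake(match_score: float, sensitivity: float) -> bool:
    """Decide whether a sound counts as the wake word.

    match_score: 0.0 to 1.0, how closely the sound resembles the wake word.
    sensitivity: 0.0 (least sensitive) to 1.0 (most sensitive).
    """
    # Higher sensitivity lowers the bar a sound must clear (made-up numbers).
    threshold = 0.9 - 0.4 * sensitivity
    return match_score >= threshold
```

Under these assumed numbers, a sound that only loosely resembles the wake word (score 0.7) triggers the device at the most sensitive setting but is ignored at the least sensitive one, which is why lowering sensitivity reduces accidental activations.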

To disable the Continued Conversation feature:

- Launch the Google Home app.

- Tap your profile icon.

- Select Assistant Settings.

- Select Continued Conversation.

- Toggle the feature off on the desired devices.

Note: Continued Conversation extends active listening periods to allow for follow-up questions.

What We Know About Alexa

General Information

Popular Devices with Alexa:

- Amazon Echo

- Amazon Echo Show

- Amazon Echo Hub

- Amazon Fire tablets and TVs

- Ring cameras and doorbells

Default Wake Words: “Alexa”.

Privacy Policy (July 2024)

Amazon has faced plenty of criticism and legal battles over its Alexa virtual assistant. Still, Amazon continues to incorporate Alexa into more of their products, from speakers and smart home devices to eyeglasses.

Amazon openly states it collects data to improve and personalize the user experience. As Amazon gathers info and learns preferences, Alexa can provide more tailored answers, advice, and services. In addition, the company stores voice recordings and text transcripts of Alexa interactions until they receive a deletion request. These recordings are subject to human grading to improve Alexa.

Amazon can also infer intimate details from interactions. For example, asking Alexa to read a sacred text could reveal your religious beliefs. Adding items to a baby or wedding registry could communicate your family or relationship status. Telling Alexa to turn off bedroom lights at a specific time and set an alarm could unveil your sleep schedule.

Furthermore, Amazon allows third parties to use Alexa to collect data for themselves. These third parties include developers for Alexa Skills (Amazon’s branding for apps) and marketers. Amazon claims they have no control over cookies and other features that third parties may use. Moreover, asking Alexa to delete your data will not affect what third parties have gathered. Those companies can still store and sell your data unless they are explicitly notified.

All of that data helps build comprehensive user profiles for interest-based ads.

Despite that, the company has made some progress in strengthening its privacy policy.

Amazon maintains that it intends to minimize data collection, provide more transparency, and improve the process. For instance, users can view the permissions they have given a third-party developer and update their Alexa settings. That option also extends to managing voice recordings and interest-based ads across connected devices. The e-commerce giant even altered Alexa products in response to feedback. Now, users can disconnect microphones and shutter cameras for peace of mind.

Amazon outlines its privacy policy and Alexa terms of use here.

Security Features

Amazon features end-to-end encryption as well. The encryption protects your data as it travels from an Alexa device to Amazon’s cloud. Data stored in the cloud is encrypted, but Amazon creates and controls the keys. Amazon indicates that all Alexa devices undergo regular security audits and receive support for two years after being discontinued. Overall, these measures inspire confidence.

However, Amazon does not clearly specify whether third parties with access to your personal data adhere to the same standards.

User Controls

To delete Alexa voice recordings:

- Launch the Alexa app.

- Tap the More tab at the bottom of the screen.

- Choose Settings.

- Select Alexa Privacy.

- Go to Review Voice History.

- Select the desired recordings.

- Select Delete all of my recordings.

To delete Alexa recordings by voice:

- Launch the Alexa app.

- Tap the More tab at the bottom of the screen.

- Choose Settings.

- Select Alexa Privacy.

- Go to Manage Your Alexa Data.

- Toggle the Enable deletion by voice setting on.

- Say “Alexa, delete everything I’ve ever said” to erase all recordings.

- Say “Alexa, delete what I just said” to erase recordings from the past ten minutes.

To disable all voice recordings on Alexa:

- Launch the Alexa app.

- Tap the More tab at the bottom of the screen.

- Choose Settings.

- Select Alexa Privacy.

- Go to Manage Your Alexa Data.

- Select Choose how long to save recordings under Voice Recordings.

- Select Don’t Save Recordings.

To opt out of human reviews of Alexa recordings:

- Launch the Alexa app.

- Tap the More tab at the bottom of the screen.

- Choose Settings.

- Select Alexa Privacy.

- Go to Manage Your Alexa Data.

- Select Help improve Alexa.

- Toggle the Use of Voice Recordings setting off.

To stop Alexa from sharing personal data with advertisers:

- Launch the Alexa app.

- Tap the More tab at the bottom of the screen.

- Choose Settings.

- Select Alexa Privacy.

- Go to Manage Your Alexa Data.

- Toggle the Interest-Based Ads from Amazon on Alexa setting off.

To stop third-party Alexa Skills from collecting personal data:

- Launch the Alexa app.

- Tap the More tab at the bottom of the screen.

- Choose Settings.

- Select Alexa Privacy.

- Go to Manage Skills and Ad Preferences.

- Determine what Skills can access personal data.

- Toggle the desired permissions off.

Even with these controls, some users still find it difficult to ignore Amazon’s prior failures to delete data.

What We Know About Other Voice Assistants

While Siri, Google Assistant, and Alexa dominate the smart device market, there are other options for people.

Bixby, Samsung’s virtual assistant, works across most Galaxy devices. Samsung claims Bixby learns about you and works with your essential apps and services to deliver a connected experience. However, Samsung doesn’t divulge many details, leaving users with questions about what data Bixby might collect and share. Samsung’s privacy policy briefly mentions Bixby twice, assuring users it cannot access their biometric data through the voice assistant.

Released in 2014, Microsoft’s Cortana helped users coordinate tasks within Windows and perform searches on Bing. The voice assistant was officially retired in favor of Microsoft Copilot in June 2024. Copilot is a generative AI chatbot that runs on OpenAI’s GPT models to assist users with a wide range of requests. Many observers expect that Copilot will feature heavily in future Windows builds. Its integration into the world’s most popular operating system is worth watching closely from a data collection and usage standpoint.

More smart devices are likely to emerge, given the commercial success of Siri, Google Assistant, and Alexa and the continued development of AI. Consumers should advocate for robust, transparent privacy policies as they embrace the next generation of voice assistants.

Tips To Protect Your Privacy

Devices with Siri, Google Assistant, and Alexa undeniably make life easier. In fact, 77% of respondents claimed they would be more likely to use voice assistants with enhanced privacy protections.

You don’t have to surrender all of your privacy rights to adopt the technology, though. Even now.

Follow these tips to protect your privacy when using voice assistants.

1. Review the device’s settings. Determine what data the voice assistant gathers. Learn how it shares that data. Understanding the device and all of its user controls is critical.

2. Limit data collection and usage. Choose settings that let the device collect the minimum amount of data needed to fulfill its purpose. See our user controls above. Repeat the process for relevant third-party apps.

3. Delete voice recordings. Do not store more recordings in the cloud than required to function. Most voice assistants allow you to erase recordings automatically.

4. Disable the microphone or camera when inactive. Many devices have physical switches to mute the microphone or shutter the camera. Check your settings if you cannot turn them off by sliding a switch.

5. Secure your account. Create a strong, unique passcode and enable biometric or two-factor authentication. Update the firmware of vulnerable devices.

6. Use with discretion. Do not ask the assistant to perform tasks that require financial or sensitive details. Do not ask private questions in public.

7. Stay up to date. Privacy policies change often. Know when your voice assistant’s terms and conditions are revised and adapt as needed. Awareness is even more crucial as we enter the era of AI.

None of these methods guarantees that your private data stays private. However, they minimize your digital footprint and offer more protection for your valuable data.

Take Control of Your Personal Data

Ultimately, using smart devices is an individual choice that comes down to convenience and customer trust.

In our survey, 57% of respondents feel in control of their data, yet 68% have never taken measures to improve their privacy. The overlap between those groups suggests the public might not appreciate the consequences. Excessive data collection and usage could expose people to unwanted ads and profiling or unauthorized access to confidential information.
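The size of that overlap can be bounded with simple arithmetic. Assuming both survey questions were answered by the same pool of respondents (an assumption, since the question-level breakdown is not given here), two groups whose shares add up to more than 100% must share at least the excess. The function name below is illustrative.

```python
def minimum_overlap(pct_a: float, pct_b: float) -> float:
    """Smallest possible share of respondents who fall in both groups."""
    return max(0.0, pct_a + pct_b - 100.0)


feel_in_control = 57.0      # % who feel in control of their data
never_took_measures = 68.0  # % who have never taken privacy measures
print(minimum_overlap(feel_in_control, never_took_measures))  # 25.0
```

In other words, under that assumption at least a quarter of respondents feel in control of their data without ever having taken a step to protect it.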

The simplest, surest way to avoid sharing sensitive data with a voice assistant is never to use one.

But, if you can’t imagine life without a virtual assistant, you should learn how to safeguard your data. Adjusting user controls to fit your preferences is an effective strategy to prevent unnecessary data collection and third-party sharing.

Take the time to protect your personal data.